Elon Musk has once again positioned himself as a disruptor, this time targeting the world’s largest source of public knowledge: Wikipedia. While his criticisms regarding the platform’s political and ideological biases hold merit, his proposed solution—a potential AI-driven knowledge base often dubbed 'Grokipedia'—is fundamentally flawed by the very problem it seeks to solve: the ineluctable bias inherent in all knowledge production.

Musk’s complaints, often aired on his social media platform X, typically focus on what he perceives as a left-leaning bias, particularly on politically charged topics or articles related to his own companies and interests. He asserts that Wikipedia’s reliance on human editors, who often lean progressive or operate under specific organizational dynamics, results in a skewing of the "neutral point of view" (NPOV) that the site ostensibly upholds. This critique resonates with many who feel that knowledge systems, including search engines and encyclopedias, are increasingly filtering information through certain political or cultural lenses.

The Human Problem, Not the Platform Problem

The core issue that Musk and other critics fail to fully address is that Wikipedia's bias is not primarily a technical failure of its platform, but a reflection of its volunteer base and the mainstream sources upon which it relies. Wikipedia's articles are built on published, reliable sources. If the majority of sources across academia, mainstream media, and established professional fields exhibit a certain structural bias, then Wikipedia, by faithfully summarizing those sources, will inherit that bias.

Musk’s vision for a replacement, presumably built around his xAI project and its Grok large language model, promises a more "truthful" or "unfiltered" representation of facts. However, this optimism ignores the fundamental challenge of building unbiased AI.

The Inescapable Bias of AI Training Data

An AI-powered knowledge base like Grokipedia would face an immediate and insurmountable obstacle: its training data. Large Language Models (LLMs) are constructed by digesting astronomical amounts of text scraped from the internet, books, and public archives.

Data Inheritance: The internet itself, the primary feedstock for any LLM, is a repository of human biases, including those present in Wikipedia, academic papers, and news articles. Grok will absorb and statistically reflect the biases already dominant in the text it is trained on. It cannot magically extract an objective truth untainted by human perspective.
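To make the inheritance point concrete, here is a deliberately tiny sketch. It is not how Grok or any real LLM is built; the corpus, the 8-to-2 split, and the `train` and `predict` helpers are all invented for illustration. It only shows that a model which learns by counting what it reads will hand back the same proportions it was fed.

```python
from collections import Counter

# Hypothetical toy corpus: 8 of 10 sentences describe the policy favorably.
corpus = ["the policy is good"] * 8 + ["the policy is bad"] * 2

def train(sentences):
    """Count which final word follows each leading context (a tiny n-gram model)."""
    counts = {}
    for sentence in sentences:
        words = sentence.split()
        context, target = tuple(words[:-1]), words[-1]
        counts.setdefault(context, Counter())[target] += 1
    return counts

def predict(counts, context):
    """Return the estimated probability of each next word for a seen context."""
    seen = counts[tuple(context.split())]
    total = sum(seen.values())
    return {word: n / total for word, n in seen.items()}

model = train(corpus)
probs = predict(model, "the policy is")
print(probs)  # {'good': 0.8, 'bad': 0.2}
```

The model has no opinion of its own about the policy. Its 80/20 answer is simply the skew of its source text, which is the same dynamic, at a vastly larger scale, that the paragraph above describes.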

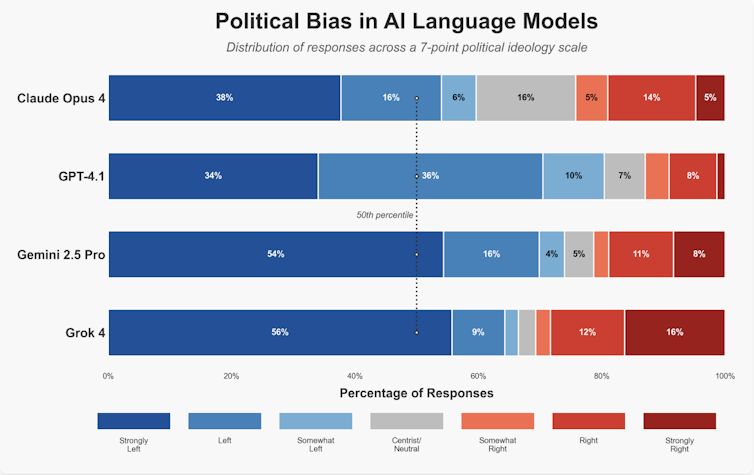

The Filter of Fine-Tuning: Furthermore, once the base model is trained, it must be fine-tuned. Fine-tuning relies on human reviewers and curated examples, and the deployed model is further steered by specific instructions (the "system prompt"), all designed to make the AI safe, helpful, and aligned with certain values. These human choices (deciding what constitutes hate speech, political neutrality, or factual accuracy) are themselves value judgments and introduce a powerful layer of intentional bias. Musk has frequently advocated for reducing what he terms "woke" guardrails in AI, but removing one set of explicit filters merely replaces it with another set of implicit ideological preferences held by the developers and engineers at xAI.

The Danger of a Single Authority

Perhaps the greatest peril of Grokipedia is the potential for consolidation of knowledge power. Wikipedia, despite its flaws, is a decentralized, consensus-driven platform. Disputes, even if heated, are public, and editorial changes are transparently logged.

Grokipedia, tied to a single, proprietary AI model, would be a black box of knowledge production. If a user queries a controversial topic and receives a biased answer, there is no public discussion, no "Talk" page, and no transparency regarding why the AI arrived at that conclusion. The final answer would simply be presented as authoritative truth, backed by the immense technological credibility of Elon Musk’s AI empire. This shift from a messy, community-validated system to a centralized, opaque authority poses a far greater threat to intellectual freedom than the biases found in Wikipedia’s current structure.

In essence, while Musk is correct to question the neutrality of existing information monopolies, attempting to replace a community-governed system with a for-profit, centralized AI system, whose training data is inherently biased, is akin to replacing one set of human editors with another, far less transparent, set of algorithms and programmers. The battle for truly neutral knowledge production cannot be won by technology alone; it must be won through diverse human participation and radical transparency, qualities that Grokipedia is unlikely to possess given its corporate and ideological origins.
